
This thread collects good general advice for analytical work. There will probably be some overlap with our thread Grand truths about human behavior, but the idea here is more toward prescriptive statements about good practices. Such advice should reach beyond the proverbial, and should be referenced, if possible, to those who have in fact performed at a high analytical level.

"Be approximately right rather than exactly wrong." John W. Tukey

"The first principle is that you must not fool yourself--and you are the easiest person to fool." Richard Feynman

Ask questions.

Develop and fine-tune a sense of the relevant, both for identifying the key leverage points in any problem and also for examining large amounts of information to find the rare diamonds in the sand.

Nearly all serious analysis requires multivariate-thinking, comparison-thinking, and causal-thinking. Develop such thinking.

-- Edward Tufte

"No scientist is admired for failing in the attempt to solve problems that lie beyond his competence. The most he can hope for is the kindly contempt earned by the Utopian politician. If politics is the art of the possible, research is surely the art of the soluble. Both are immensely practical-minded affairs." P B Medawar, The Art of the Soluble, 1967.

The choice of problem is often the most important act of all in analytical work: the idea is to find important problems that can be solved. This requires a certain self-awareness on the part of the researcher.

-- Edward Tufte

You have already quoted a short version of John Tukey's aphorism, but I prefer a slightly longer expression of the same idea that he wrote in 1962:

Far better an approximate answer to the right question, which is often vague, than an exact answer to the wrong question, which can always be made precise.

Somewhere he also wrote (but I've been unsuccessful in tracking down where or when I read it),

If a thing is not worth doing at all, it is not worth doing well!

-- Athel Cornish-Bowden (email)

"If it isn't worth doing, it isn't worth doing well." Donald Hebb

Another formulation with a different meaning is "If it isn't worth doing superficially, it isn't worth doing well." This places the idea within the domain of the relevance principle (quick unbiased scanning reduces fruitless pursuit of dead ends). Is there a cite for the reformulation, Kindly Contributors?

The Tukey elaboration on approximation is very good.

-- Edward Tufte

I read Hebb's quotation as a statement about practice or learning. There are a lot of things that may not be "worth doing" but that we do anyway - whether for the sake of novelty, trial and error, etc.

Perhaps he is telling us not to make these items a pursuit of perfection - do not invest the time or energy to do worthless things really well.

-- Tchad (email)

We have a saying around our office that is used when a lot of analytical data is included in a response to a customer that does not give them the answer they need:

"There is no advantage in disappointing a customer in great detail."

-- Keith Dishman (email)

Paraphrasing Robert Anton Wilson:

Understand the difference between a mathematical proof, a proof in the hard sciences like physics, a proof in the social sciences, and a poetic proof.

(And, importantly, understand the validity, usefulness and application of each)

-- Sandy (email)

The reference that I have for the Tukey quotation (which I haven't checked for years, and I no longer have easy access to the journal) is as follows: J. W. Tukey (1962) Annals of Mathematical Statistics 33, 1-67.

-- Athel Cornish-Bowden (email)

"Absence of evidence is not evidence of absence, at least until a good search is done."

The traditional statement (AEINEA), without the qualification at the end, can lead to mischievous evidence-free claims for both absence and non-absence. Thus I have added the qualification here because the more thorough the search for evidence, the better the evidence for absence.

The unmodified traditional statement (AEINEA) was used by Rumsfeld in response to the failure to discover WMD. It was also used by Carl Sagan to reason about extraterrestrial life; on the other hand, the Fermi paradox argues that the absence of ETL evidence is in fact evidence of absence.

-- ET

A common issue in reasoning about evidence is in making inferences or theorizing about smaller-scale mechanisms on the basis of larger-scale empirical observations.

Thus aggregate stock market results are observed, and then inferences are made about what various groups of investors did. Aggregate electoral results are explained by reference to the behavior of individual voters. The problem is that aggregate evidence can be consistent with a diversity of smaller scale disaggregated mechanisms. In social science, this is sometimes called the problem of "ecological correlation" or the "levels of analysis" problem. Usually it is easier to collect aggregated data rather than to collect data at the level of explanation.

Here's a first try at an aphorism:

"Evidence at a larger scale may be consistent with a variety of smaller scale mechanisms."

Here's another try:

"As above, so below is an empirical question, not a reasonable assumption."
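A minimal sketch of the point in Python, with made-up numbers: suppose a district is 60% group A and 40% group B, and a candidate wins 50% of the vote overall. The function name, the group shares, and the grid step are all hypothetical; the sketch only enumerates group-level support rates that reproduce the same aggregate.

```python
from fractions import Fraction


def consistent_group_rates(share_a, aggregate, steps=20):
    """List (rate_a, rate_b) pairs that yield the same aggregate rate.

    share_a   -- fraction of the population in group A (assumed < 1)
    aggregate -- the observed overall rate
    steps     -- grid resolution for rate_a (hypothetical choice)
    """
    share_a = Fraction(share_a)
    aggregate = Fraction(aggregate)
    share_b = 1 - share_a
    pairs = []
    for i in range(steps + 1):
        rate_a = Fraction(i, steps)
        # Solve share_a * rate_a + share_b * rate_b = aggregate for rate_b.
        rate_b = (aggregate - share_a * rate_a) / share_b
        if 0 <= rate_b <= 1:
            pairs.append((rate_a, rate_b))
    return pairs


pairs = consistent_group_rates(Fraction(3, 5), Fraction(1, 2))
for rate_a, rate_b in pairs:
    print(f"A supports at {float(rate_a):.2f}, B supports at {float(rate_b):.2f}")
```

On this coarse grid, thirteen different pairs, running from (20% of A, 95% of B) to (80% of A, 5% of B), give the identical district total. The aggregate alone cannot choose among them.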

Here's an example of the levels of analysis problem, all over the place:


Source: Oliver Curry, "One good deed: Can a simple equation explain the development of altruism?," (book review of Lee Alan Dugatkin, The Altruism Equation), Nature 444 (7 December 2006), 683.

-- Edward Tufte

The first image that popped in my head was a side by side set of distributions:

1) a normal distribution where the average is the most common response; and,

2) a bimodal distribution where the average is the least common response.

In the first image, the word that seems to describe it is "consensus" and the second is more like design by committee, i.e., the average result does not reflect anyone's opinion. This could be described as extreme compromise.
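A small illustration of the two pictures, as a sketch in Python. The two sets of responses (on a hypothetical 1-to-7 scale) are invented so that both have the same mean; the helper name is an assumption too.

```python
from collections import Counter
from statistics import mean


def share_at_mean(responses):
    """Return (mean, fraction of responses exactly equal to the mean)."""
    m = mean(responses)
    counts = Counter(responses)
    return m, counts[m] / len(responses)


consensus = [3, 4, 4, 4, 4, 5]   # most people sit at the average
polarized = [1, 1, 1, 7, 7, 7]   # nobody sits at the average

print(share_at_mean(consensus))
print(share_at_mean(polarized))
```

Both groups average 4, but in the polarized group not a single respondent gave that answer.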

Here are a couple more aphorisms for the list:

Analysts who use averages for interpretation cannot expect anything better than average results.

Average people are (on average) content with averages.

Averages hide the trees in the forest.

-- Tchad (email)

I think of "average" in the statistical sense, as an effective estimate, and not in the sense of something being mediocre. From another thread, here are some ideas about averages and beyond averages:

"If you know nothing, the decision rule is to use the average (mean or often the median) or use persistence forecasting. To describe something, measure averages and variances, along with deviations from persistence forecasting. Understanding, however, requires causal explanations supported by evidence."

"Average" is meant both in the statistical sense and in the wisdom-of-crowds sense. "Persistence forecasting" is, for example, saying that tomorrow will be like today (and is often a hard forecast to beat).
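The two know-nothing rules can be compared directly. The following Python sketch uses an invented, steadily rising series and hypothetical helper names; it scores each rule by mean absolute error on one-step-ahead forecasts.

```python
from statistics import mean


def persistence_forecasts(series):
    """Forecast each value as the value observed just before it."""
    return series[:-1]


def running_mean_forecasts(series):
    """Forecast each value as the mean of all earlier values."""
    return [mean(series[:i]) for i in range(1, len(series))]


def mean_absolute_error(forecasts, actuals):
    return mean([abs(f - a) for f, a in zip(forecasts, actuals)])


series = [10, 11, 12, 13, 14]        # made-up data with a steady trend
actuals = series[1:]

persistence_mae = mean_absolute_error(persistence_forecasts(series), actuals)
running_mean_mae = mean_absolute_error(running_mean_forecasts(series), actuals)
print(persistence_mae, running_mean_mae)
```

On a trending series like this one, persistence beats the running average. On a noisy series with no trend the ranking can reverse, which is why both baselines are worth checking before claiming that a model adds anything.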

There might be a grander (although probably more cryptic) way of making the point about the difference between description and causal explanation.

-- Edward Tufte

If all elements in a population are alike, a sample size of 1 (n = 1) is all you need. (I first ran across this probably in R. A. Fisher's book, Statistical Methods for Research Workers).

Otherwise, for n = 1, the estimate of the variance is infinite. n = 1 is much better than n = 0, but that's all it is better than. You never get a greater reduction in estimated variance than when you go from n = 1 to n = 2. (Last sentence is probably from John Tukey.)
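A short Python sketch of the n = 1 problem. Two caveats, both assumptions of this sketch and not from the thread: with the usual n - 1 divisor the sample variance at n = 1 is strictly undefined (0/0), which is how the standard library treats it; and the second function assumes a known population variance `sigma2` in order to show how much each extra observation reduces the variance of the sample mean.

```python
import statistics


def sample_variance_or_none(values):
    """Sample variance (n - 1 divisor), or None when it cannot be estimated."""
    try:
        return statistics.variance(values)
    except statistics.StatisticsError:
        # Raised when fewer than two data points are supplied.
        return None


def variance_of_mean_drop(n, sigma2=1.0):
    """Reduction in Var(mean) = sigma2 / n when going from n to n + 1."""
    return sigma2 / n - sigma2 / (n + 1)


print(sample_variance_or_none([3.0]))        # no estimate from one point
print(sample_variance_or_none([3.0, 5.0]))   # first usable estimate
print([variance_of_mean_drop(n) for n in (1, 2, 3)])
```

Under the known-variance assumption the drop equals sigma2 / (n(n + 1)), which is largest at n = 1 and shrinks quickly after that.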

The assumption of independence, which makes the mathematical modeling tractable, is rarely true empirically. Sometimes the most interesting substantive issue is the character of the covariances, which many models assume not to exist.

(I recently gave a talk at Stanford about turning points in my work, "An Academic and Otherwise Life, an n = 1", and included the remark about the infinite sample variance for n = 1 in my introduction to indicate that there were no sound inferences to be made from my personal narrative. At the end, I went ahead and made an inference, which was that I thought it was a good idea to have turning points throughout one's work--and thus one's life--and not just up to age 30.)

-- Edward Tufte

The inability to spot the flaw in an argument does not necessarily mean that the argument is correct.

As a lawyer, I have seen many lawyers get into arguments with non-lawyers. Usually, the lawyer out-argues the other person (often a spouse or significant other). The non-lawyer simply does not think quickly or logically enough to spot and articulate flaws in the lawyer's argument. Many such lawyers are foolish enough to believe they have won the argument, and that they must therefore be right.

In fact, they have almost always lost the argument, and are many times not at all right. Being unable to spot the flaw right now does not mean you must accept the argument.

-- Patrick Martin (email)

Patrick Martin's comment is very insightful.

One's inability to produce the devastating comeback live during the course of discussion-- l'esprit de l'escalier--is why it is helpful, in deciding serious matters (1) to have the material under discussion distributed in advance, (2) to rehearse the possible exchange in advance, (3) to take a time-out, leave the meeting, escape the groupthink and bullying, go for a long walk down the hall (or up the stairs in the French version of after-the-fact-wit), and ask yourself "What would Richard Feynman do?"

There is also the problem here of wrongly convincing oneself about the validity of one's own argument, and then strengthening one's certainty by selective searching rather than finding the devastating response that defeats one's own position.

Self-correction can be more likely achieved if one has trustworthy sources of criticism and review. Last week I had to make 2 unusually substantial decisions involving matters of evidence and values. Both decisions involved reversal of long-run courses. In one of them I had been moving forward with enthusiasm to do something really stupid until 2 of my colleagues took me aside and crisply reframed the issue so as to induce me to avoid the swamp. In the second decision, 3 colleagues wanted to meet together with me (it must be serious, I thought, since we rarely have systematic meetings) to discuss a problem; instead I met one-on-one with each in an attempt to diversify the input and to allow me to think about the matter piecemeal rather than have to be wise right on the spot. Another strategy for achieving a critical review is to make a quick decision and then be willing to change in the face of the regret costs. And sometimes my colleagues undo my inept decisions by simply not doing what I decided.

All this, I hope, works out because the underlying idea is that if you're in a hole, don't dig deeper.

-- Edward Tufte

In the academic world, there is, now and then, a career pattern among those concerned with technical methodology in the social sciences and medicine. Early in the career, they publish methodological critiques of the works of others, then write how-to-do-it-correctly articles (that, say, import econometric ideas into the rest of social science), and finally turn to substantive research of their own. Sometimes that research is disappointing, or not even published, as the "big book" is eternally forthcoming. This occurs perhaps because of the research paralysis caused by a preoccupation with methodological defects, or because the preoccupation with methods has limited the study of substance, or simply because the scholar's talents are in methods, not substance. The division of scholarly labor (at least in the social sciences) also favors the separation of method from substance. On the other hand, a skilled research analyst can read almost any paper in a broad field, and perhaps in many fields, and testify to the integrity of the research process.

One point, then, is that the pursuit of methodological rigor takes time and may well lead to substantial opportunity costs. And, also, that our methods for improving analytical quality should have as a direct goal the production of sound substantive results, not just the production of methodological primers.

-- Edward Tufte

Is there a clear statement somewhere in the medical literature about the difference between a marker variable and a causal variable? Are distinctions to be made among markers, proxies, and spurious correlation?

For example, is active exercise a marker or an indicator of good health, or a cause of good health?

-- Edward Tufte

The principles and detective work of forensic accounting might provide some useful advice for our topic. Last month at my one-day course a forensic accountant mentioned the financial engineering involved in "closing the gap," as a company tries to fudge over shortfalls in financial targets.

Could a Kindly Contributor provide some key references? Is there a standard textbook, a classic article, a collection of case studies?

-- Edward Tufte

Several interesting threads here, that I believe all inter-relate.

Tufte's comment on getting the bad news he needed mirrors a fair bit of business literature (e.g. Bielaszka-Duvernay, Christina, "How to get the bad news you need." Harvard Business Review, Jan 2004). The upshot is that you must have a culture of facing the facts, whatever they may be, and actively engage colleagues and employees in voicing dissent. Jim Collins refers to the Stockdale Paradox (confront the brutal facts and retain faith that you will prevail), which I take as a different slice on the same problem.

Regarding the career path of methodological critique, I have found in the business world that the focus on methodology often grows from a culture that encourages cutting corners, if not outright fraud. Where people are doing a job well, there is rarely any need for such methodological critique.

Similarly, in taking his three colleagues aside separately, Tufte engaged in what the Japanese call nemawashi, which literally means "digging around the root." Applied to this sort of situation, it means actively acquiring differing opinions and quietly building consensus for some change. Effective nemawashi cannot be achieved through structural mechanisms (e.g. through standard operating procedures), rather, it is almost purely a cultural phenomenon.

Which, I believe, all ties together with the following heuristic: it's all about the right people. The "right people" are those with the self-discipline to face the facts and still do a job right. Without them, you'll find yourself mired down in methodological critiques and you still won't get the bad news you need. I suspect that the converse is also true: where one finds methodological critiques or a lack of consensus, one should also look for evidence that intellectual honesty is being supplanted by expediency.

The heuristic is partially taken from Collins' book Good to Great.

-- Tom Hopper (email)

Below, a comment on evidence selection from E. H. Gombrich, Topics of our time: Twentieth-century issues in learning and in art (London 1991), 40. Part of the problem Gombrich raises is that an artistic style practice is used as testimony for local and unique cultural differences (the "designer culture" hypothesis). The counter-examples suggest that universals also operate--and that the Chinese artists and viewers understood the operations of perspective in a manner similar to other humans, despite their unique method of doing artwork perspective in a certain way for some time period centuries ago.


-- Edward Tufte

From a recent issue of Science, here is an example of skewed bird distributions--in accord with the principle that the world is often lognormal, or Pareto, or skewed, or long-tailed.


-- Edward Tufte

Regarding the phrase, "Absence of evidence is not evidence of absence," I appreciate the addition of the important qualifier, "Until a good search is done." A similar cliché to the AEINEA claim is "You can't prove a negative." Pasquerello (1984) noted that "anti-negativism" sentiment is fairly strong but that it's fallacious. When things are large, numerous, and/or dissimilar to neighboring entities, and the search space is well defined -- say, the elephants in the room -- it's just about as easy to show their absence as it is to show their presence, whichever the case may be.

"You can't prove a negative" tends to have a certain surface appeal because quite often, the things under consideration have a poorly defined or impractical search space (e.g., you can't prove that life on other planets doesn't exist because the search space is impractically large -- i.e., the universe), or the things being proposed are poorly defined ("Well, ghosts aren't really like that...").

Pasquerello T. 1984. Proving negatives and the paranormal. The Skeptical Inquirer 8(3): 259-270.

-- Zen Faulkes (email)

Given the sort of people who say "Absence of evidence is not evidence of absence" I'd prefer to hear it with another addition: "Absence of evidence is not evidence of absence, but still less is it evidence of presence".

-- Athel Cornish-Bowden (email)

In the discussion of whether absence of evidence constitutes the same thing as evidence of absence, it is perhaps worth citing a methodology of proof, in particular the use of truth trees (see, for example, Wilfrid Hodges' book, Logic). Under truth trees, the negative of the conclusion is stated and then, by the use of truth trees, one tries to prove that this negative assertion can only generate inconsistent statements under formal predicate or propositional calculus. So, to prove the evidence of absence, one would need to assert that there is no evidence of absence and prove that this is logically impossible.

-- Will Oswald (email)

[Also posted to our thread on evidence corruption]

Talking, rational, telepathic animals one more time. See the article on the African Grey Parrot by Robert Todd Carroll, The Skeptic's Dictionary at

http://skepdic.com/nkisi.html:

Richard Feynman's principle "The first principle is that you must not fool yourself--and you are the easiest person to fool" might be revised to cover the propagation of foolishness:

"The first principle is that you must not fool yourself (and you are the easiest person to fool), but also that you must not attempt to fool others with your foolishness."

-- Edward Tufte

Questions

Your suggestion that people "ask questions" is a good one. In a course I teach about a particular data analysis toolset, I offer a refinement:

Ask the right question. In the typical case you don't know which is the right question, so ask lots of questions.

This advice informs one's choice of tools. The faster you can ask and answer questions, the faster you'll arrive at the really interesting questions.

Best,

-J

-- John Rauser (email)

Regarding the difference between a marker variable and a causal variable in medicine: depending upon one's condition, the ability to actively exercise might be considered an indicator of good / improving health (as in the ability to regularly exercise), or a causal agent of good health (as regular and vigorous exercise has been known to extend lifespan and change the level of certain markers, e.g., cholesterols). In other words, markers provide us with tools by which to estimate the likelihood of a serious adverse event / longer lifespan, and create a prognosis. Statements in the literature pertaining to drug development (from the Biomarkers Definition Working Group, http://ospp.od.nih.gov/biomarkers/ClinicalParmacology.pdf) have been developed regarding clinical (efficacy) endpoints as distinguished from surrogate endpoints - of which biomarkers (or markers) are a part. Here the goal is assessment of the benefit versus risk of a therapeutic intervention (such as change in behavior via exercise). Evaluation requiring long periods of time (e.g., "a lifetime") or large numbers of clinical subjects may make some clinical efficacy endpoints unfeasible. Thus the need / demand for markers which are reliable predictors of outcome. Developing biomarkers as surrogate endpoints for clinical endpoints is a long and laborious process in methodology development, as can be seen from the above paper's graphic, Figure 1.

-- Peter Gompper (email)

"Just get to the verb." Robert Altman

-- Edward Tufte

Rutherford said, "All science is either physics or stamp collecting."

He may have meant it pejoratively; I don't know the context. I will take it otherwise and interpret it in two lessons. The first also happens to be the mantra of my advisor from graduate school: Be quantitative. The second is to be mindful that one phenomenon may be described by the mathematics that applies to another, even if it's not in your field of study.

I won't claim to have any familiarity whatsoever with the physiology literature, but it seems to me that exercise is in a positive feedback loop with good health, i.e., it is both a marker and a cause. (That's positive in the sense that the sign is the same, not that the universe is saying "atta boy!") Good health has such a similar relationship with so many different characteristics that I imagine picking out the specific and minuscule biochemical trigger after the fact is difficult at best. Which model is more useful is left as an exercise to the reader.

Apropos another thread, I note with measured glee the sidebar in the Harvard Business Review article cited above ("How to get the bad news you need") entitled "Communication Dashboard." I would think if you want to encourage people to break bad news to their superiors, you wouldn't have them do so through as ridiculously simplified and impersonal a mechanism as a red stoplight on a web page.

-- Patrick Carr (email)

Good list of cognitive biases

Wikipedia List of Cognitive Biases (Please read this before continuing below.)

After reading through the list, one wonders how people ever get anything right. That's called the "cognitive biases bias," or maybe the "skepticism bias" or "paralysis by analysis."

There's also the "bias bias," where lists of cognitive biases are used as rhetorical weapons to attack any analysis, regardless of the quality of the analysis. The previous sentence then could be countered by describing it as an example of the "bias bias bias," and so on in a boring infinite regress of tu quoque disputation, or "slashdot."

The way out is to demand evidence for a claim of bias, and not just to rely on an assertion of bias. Thus the critic is responsible for providing good evidence for the claim of bias and for demonstrating that the claimed bias is relevant to the findings of the original work. Of course that evidence may be biased. . . . And, at some point, we may have to act on what evidence we have in hand, although such evidence may have methodological imperfections.

The effects of cognitive biases are diluted by peer review in scholarship, by the extent of opportunity for advancing alternative explanations, by public review, by the presence of good lists of cognitive biases, and, most of all, by additional evidence.

The points above might well be included in the Wikipedia entry, in order to dilute the bias ("deformation professionnelle") of the bias analysis profession.

In Wikipedia, I particularly appreciated:

"Deformation professionnelle is a French phrase, meaning a tendency to look at things from the point of view of one's own profession and forget a broader perspective. It is a pun on the expression, "formation professionnelle," meaning "professional training." The implication is that all (or most) professional training results to some extent in a distortion of the way the professional views the world."

Thus the essay "The Economisting of Art" is about what I view as the early limits in the microeconomic approach to understanding the prices of art at auction.

In my wanderings through various fields over the years, I have become particularly aware of deformation professionnelle and, indeed, have tried to do fresh things that break through local professional customs, parochialisms, and deformations.

-- Edward Tufte

Dear ET,

Great thread that I hadn't seen before...

I would recommend that you also read the Wikipedia entry on Pareidolia, which is defined as "a psychological phenomenon involving a vague and random stimulus being mistakenly perceived as recognizable". Its interest in the psychological literature is as the basis of the famous Rorschach inkblot test. It is also the basis of the "Virgin Mary on toasted cheese sandwich" and "Jesus Christ on an oyster shell" type of images you can find via Google image search. It was nicely discussed by Michael Shermer in Scientific American May 2005 (http://tinyurl.com/awm2e). My belief is that during evolution the cognitive ability that Pareidolia is based on was of high selective value for early humans (it's about seeing patterns in a noisy background).

In my own field of quantitative microscopy this phenomenon is particularly pervasive and has to be dealt with rigorously and early in training. Researchers are very adept at seeing a particular microstructural feature at high magnification and then rationalising what they see as being in favour of or against their pet hypothesis. Beware the pretty pictures! Key evidence that a pretty picture is about to be used in a scientific paper includes the phrase: "A typical micrograph is presented in Figure 1". It is almost never a typical micrograph. Really good analytical reasoning for any inference based on microscopic images must (at least) answer the following questions:

(1) What process was used by design to obtain the sample that was imaged? (2) What precautions has the researcher taken to ensure that representative images were used for measurement? (3) How many measurements were taken, and has any consideration been given to the number required to establish good evidence of the conclusion? (4) If the image represents a 2D section through the 3D structure, has the researcher given a good explanation of how the 2D measurements she has made relate to the 3D structure of interest? (5) What have the image analysis tricks (PhotoShopping) done to the images and the evidence within them?

etc.

The scientific discipline of drawing high quality evidence based conclusions from microscopy is known as stereology (pretty good Wikipedia entry on it). And as one famous stereologist Prof Luis Cruz-Orive once put it "Quantifying is a committing task" (Toward a more objective biology. Neurobiology of aging Vol 15 pp 377-378).

best wishes Matt

-- Matt R (email)

Do biases usually work?

Even though at times bias X leads to incorrect conclusions, how often is bias X an efficient and even wise way of reducing decision costs? Instead of turning this question into a debate about the definition of "bias," can the costs of a bias be turned into empirical questions: How frequently does bias X lead to wrong conclusions or to conclusions that need to be adjusted? Is bias X chronically harmful? What is the magnitude of the bias? Is the bias so corrupting that it has produced incorrect conclusions? These questions grow from the principle that if something is not worthwhile doing superficially, then it is not worthwhile doing profoundly--an idea that reduces decision costs. Sometimes the announcement that work is biased finesses the question of whether the work is correct. Of course source credibility is an element in judging whether a work is likely to be correct, but sometimes even a biased analysis may arrive at the correct answer.

-- Edward Tufte

More ways to be wrong than right.

Here is a nice meta list of all the mistakes one can make in experimental design and interpretation at Peter Norvig's website (http://norvig.com/experiment-design.html).

Matt

-- Matt R (email)

A sense of the relevant

"The art of being wise is the art of knowing what to overlook." William James, The Principles of Psychology, 1890.

-- Edward Tufte

It's more complicated than that

An interesting account in the New York Times: http://www.nytimes.com/2007/07/01/business/yourmoney/01frame.html?ref=business

-- Edward Tufte

Even though at times bias X leads to incorrect conclusions, how often is bias X an efficient and even wise way of reducing decision costs?

There is a very similar problem in product development, where there's always a conflict between making data-driven decisions and acting quickly based on the best available expert judgement (which is wrong a surprisingly high percentage of the time). You can't always wait for the data to make a decision, but you also don't want to make the wrong decision.

After some thought and discussions with people who are more experienced than me (and probably smarter), the best solution seems to be economic: estimate the cost of delaying a decision (to collect data and make an appropriate decision) vs. making the wrong decision (generally, this might be described as the cost of rework, though there may be tooling, testing, and delays associated with it). This is pretty standard risk analysis, really. A company can prepare for such situations by preparing a basic economic model for each project in advance, and deriving appropriate decision rules that can be applied by project managers or even team members on-the-fly (e.g. have a standard cost of delaying a project by one month).
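A rough sketch of such a decision rule in Python. Everything here is an assumption for illustration: the function name, the parameters, and the dollar figures are made up, and the model is the simplest possible expected-cost comparison, not anything taken from Reinertsen or a real project.

```python
def cheaper_option(p_wrong_now, p_wrong_after_data, rework_cost,
                   delay_months, delay_cost_per_month):
    """Compare the expected cost of deciding now against waiting for data.

    Returns (choice, expected_cost_now, expected_cost_wait).
    """
    # Deciding now: we pay for rework only if the judgement call is wrong.
    decide_now = p_wrong_now * rework_cost
    # Waiting: we always pay for the delay, and rework is less likely.
    wait = (delay_months * delay_cost_per_month
            + p_wrong_after_data * rework_cost)
    choice = "decide now" if decide_now <= wait else "wait for data"
    return choice, decide_now, wait


# Hypothetical project: expert judgement is wrong 40% of the time,
# data cuts that to 10%, rework costs 100,000, and data takes one month.
print(cheaper_option(0.4, 0.1, 100_000, 1, 25_000))
print(cheaper_option(0.4, 0.1, 100_000, 1, 50_000))
```

With a standard delay cost of 25,000 per month the rule says to wait; double the delay cost and it says to decide now. The point of the approximate model is that anyone on the team can apply it the same way.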

One of the keys to this sort of economic model is that, as has been discussed elsewhere, it is better to be approximately correct than exactly wrong. An approximately correct answer that everyone can use is much better than having the variance of everyone making their own guesses, or an exact answer that's too complex for the people on the front lines to work with.

Donald Reinertsen, author of Managing the Design Factory, has talked about this approach in his books and seminars, and is probably the primary basis for my thinking here.

Of course, there is an underlying assumption here that the organization can support such a rational approach to decision-making, including rewarding the "bad" outcomes arrived at by rational decisions (or, rather, by the agreed-upon process). A lot of managers are prone to punishing "failures" and rewarding "successes," or, in technology-oriented companies, rewarding risk-taking (quick decisions) over more careful or rational approaches. I think the rational approach requires managers to take a systems approach to their organization and its processes.

-- Tom Hopper (email)

John von Neumann

"There is no sense in being precise, when you don't even know what you're talking about.''

John von Neumann,

quoted by professional gambler Barry Greenstein, Ace on the River.

Reference via J. Michael Steele.

ET variation, however:

"There is no sense in making approximations, when you don't even know what you're talking about."

-- Edward Tufte

Charles Darwin via Warren Buffett

In a very informative essay on baroque financial instruments (such as derivatives) John Lanchester quotes Warren Buffett quoting Charles Darwin:

No matter how financially sophisticated you are, you can't possibly learn from reading the disclosure documents of a derivatives-intensive company what risks lurk in its positions. Indeed, the more you know about derivatives, the less you will feel you can learn from the disclosures normally proffered you. In Darwin's words, "Ignorance more frequently begets confidence than does knowledge."

Source: John Lanchester, "Cityphilia," London Review of Books (3 January 2008).

-- Edward Tufte

I like the point about N=2 being a huge jump over N=1 and N=1 being better than N=0. I think that in many business problems, going out and getting two real data points is a significant improvement and moves teams and management past endless arguments from theory. The important thing is to buckle down and get a couple of data points.

Also, I find that in addition to getting info on the immediate question, one usually learns things about other issues, including issues that were not being pushed on as part of solution space, but are pertinent. Also, one usually learns something about how to learn (how to prospect for info, for relevant info).

Note: I'm thinking about strategic issues, often requiring an interview or even a visit to a customer, competitor, supplier, etc. (although it could also be consulting secondary literature, doing an analysis, or the like).

P.S. I'm finding more and more how powerful Google is. Not at all that it should be the end point. But when people bring an all-new question or issue, I will sometimes do a Google search while they're talking. The sooner evidence starts being brought into theorizing and issue analysis and, god help us, "day one solutions", the better.

-- TCO (email)

The Oxford Dictionary of Statistics states that both W. Edwards Deming and John Tukey "were fond of quoting" F. J. Anscombe's advice "that it is better to 'realize what the problem really is, and solve that problem as well as we can, instead of inventing a substitute problem that can be solved exactly, but is irrelevant.'" Many statistics students are familiar with Anscombe's regression data (four sets of data with remarkably similar summary statistics but distinctly different scatter plots), and it just so happens that Tukey and Anscombe were brothers-in-law (how cool is that?).
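
[ Editor: A minimal sketch of why Anscombe's data make the point. It computes the usual summary statistics for the four published data sets; the function names are illustrative only and are not taken from any source cited in this thread. ]

```python
from math import sqrt

# Anscombe's (1973) four data sets; sets I-III share the same x values.
X123 = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]
QUARTET = {
    "I": (X123, [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]),
    "II": (X123, [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]),
    "III": (X123, [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]),
    "IV": ([8, 8, 8, 8, 8, 8, 8, 19, 8, 8, 8],
           [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]),
}


def mean(values):
    return sum(values) / len(values)


def sample_variance(values):
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs)
    sy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(sx * sy)


def summarize(xs, ys):
    """Return the summary statistics that are nearly identical across the sets."""
    return {
        "mean_x": mean(xs),
        "var_x": sample_variance(xs),
        "mean_y": mean(ys),
        "var_y": sample_variance(ys),
        "r": pearson_r(xs, ys),
    }


if __name__ == "__main__":
    for name, (xs, ys) in QUARTET.items():
        s = summarize(xs, ys)
        print(name, {k: round(v, 2) for k, v in s.items()})
```

The four rows print nearly the same numbers, which is exactly why the scatter plots have to be looked at.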

-- Joe McCaughey (email)

Anscombe's Quartet appears on the first page of The Visual Display of Quantitative Information.

-- Derek Cotter (email)

Groupthink: The wisdom of crowds or the tyranny of the mob?

Two Yale colleagues, Robert J. Shiller and Irving L. Janis, on hedging one's position:

http://www.nytimes.com/2008/11/02/business/02view.html?partner=permalink&exprod=permalink

-- Edward Tufte

An interesting essay by Michael Shermer, "Stage Fright".

From the stages of grief to the stages of moral development, stage theories have little evidentiary support.

-- Edward Tufte

I frequently reference George Box:

"Essentially, all models are wrong, but some are useful." - Box, George E. P.; Norman R. Draper (1987), Empirical Model-Building and Response Surfaces.

-- Paul Parashak (email)

Fermi problems are excellent practice for developing that 'sense of the relevant' in quantitative work.
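
[ Editor: A minimal sketch of a Fermi estimate, using the classic "piano tuners in a large city" question. Every input below is a round-number assumption chosen for illustration, not a measured value; the point is the chain of factors, not the answer. ]

```python
def estimate_piano_tuners(
    population=3_000_000,        # assumed city population
    people_per_household=2,      # assumed
    households_per_piano=20,     # assumed: one household in twenty owns a piano
    tunings_per_piano_year=1,    # assumed
    tunings_per_tuner_day=4,     # assumed: about two hours each, with travel
    working_days_per_year=250,   # assumed
):
    """Chain rough factors together to get an order-of-magnitude estimate."""
    households = population / people_per_household
    pianos = households / households_per_piano
    tunings_needed = pianos * tunings_per_piano_year
    tunings_per_tuner_year = tunings_per_tuner_day * working_days_per_year
    return tunings_needed / tunings_per_tuner_year


if __name__ == "__main__":
    print(f"Roughly {estimate_piano_tuners():.0f} tuners")
```

Changing any single assumption by a factor of two moves the answer by a factor of two, which shows quickly which inputs matter.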

-- Geoffrey Brent (email)

Above ET mentioned "detective work of forensic accounting" and asked for a definitive reference. Perhaps as a by-product of too many mystery novels as a kid and far, far too many interactions with accountants as an adult, my mind jumped immediately to the "definitive detective". Who better to provide advice on analytical methods than Sherlock Holmes?

- "In solving a problem of this sort, the grand thing is to be able to reason backwards. That is a very useful accomplishment, and a very easy one, but people do not practice it much. In the everyday affairs of life it is more useful to reason forward, and so the other comes to be neglected. There are fifty who can reason synthetically for one who can reason analytically." (A Study in Scarlet)

- "One should always look for a possible alternative and provide against it. It is the first rule of criminal investigation." (The Adventure of Black Peter)

- "It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts." (A Scandal in Bohemia)

- "We balance probabilities and choose the most likely. It is the scientific use of the imagination." (The Hound of the Baskervilles)

- "We must look for consistency. Where there is want of it we must suspect deception." (The Problem of Thor Bridge)

- "Singularity is almost invariably a clue. The more featureless and commonplace a crime is, the more difficult it is to bring it home." (The Boscombe Valley Mystery)

- "It is of the highest importance in the art of detection to be able to recognize out of a number of facts which are incidental and which vital. Otherwise your energy and attention must be dissipated instead of being concentrated." (The Reigate Squire)

- "Circumstantial evidence is a very tricky thing. It may seem to point very straight to one thing, but if you shift your own point of view a little, you may find it pointing in an equally uncompromising manner to something entirely different." (The Boscombe Valley Mystery)

- "They say that genius is an infinite capacity for taking pains. It's a very bad definition, but it does apply to detective work." (A Study in Scarlet)

- "Nothing clears up a case so much as stating it to another person." (Silver Blaze)

Along the way I've developed my own small contribution, Clark's Corollary to Doyle's Law: "When you have eliminated the impossible and nothing remains, whatever should fill the resulting vacuum is probably the truth."

-- Brian Clark (email)

Anecdotes vs. data

The plural of anecdote is not data.

See Jack Shafer's latest "Bogus trend story of the week" here.

-- Edward Tufte

The plural of anecdote?

“Anecdata”

This word yielded over 2.6 million hits on Google today. A quick scan revealed this early (March 1992) occurrence, recorded by wordspy.com, from a Paul Solman interview with David Wyss on the MacNeil/Lehrer NewsHour.

Word Spy offers many clever, often humorous neologisms.

-- Jon Gross (email)

Dear ET,

I have just begun pulling together material for a book I am writing on "Integrated Quantification" and was reviewing some of my favourite web sources - including of course Ask E.T.

In this thread I spotted the line: "A common issue in reasoning about evidence is in making inferences or theorizing about smaller-scale mechanisms on the basis of larger-scale empirical observations." The issue is all about experimental noise: there is an infinity of small-scale structures that are compatible with the larger-scale observation to within the noise level.

This reminded me of one of the key issues in a field of scientific inference known as Maximum Entropy data processing. One would like to reconstruct a distributed positive, additive quantity (typically a spectrum or image) while having only some integrated observed quantities (e.g. sums or integrals over a number of cells in the image). In general this is impossible; however, if the researcher has a model of the data generation process of the experiment (quite often the case in the physical sciences), then the Maximum Entropy approach can be used very successfully to achieve this. In fact the method applies a constraint on the allowed reconstructions and says that we seek the smoothest reconstruction possible which is consistent with the observed data. The output is the full posterior probability distribution over reconstructed images.
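
[ Editor: A minimal sketch of the maximum-entropy idea on a toy problem, not the image reconstruction described above. It finds the most uniform distribution over the faces of a die that is consistent with a single integrated observation (the average roll), assuming no noise; the function name and the bisection solver are illustrative choices. ]

```python
import math


def maxent_die(target_mean, faces=(1, 2, 3, 4, 5, 6), tol=1e-12):
    """Maximum-entropy probabilities over `faces` with a fixed mean.

    The maximum-entropy solution under a mean constraint has the form
    p_i proportional to exp(lam * face_i); we solve for lam by bisection,
    using the fact that the mean increases monotonically with lam.
    """
    def distribution(lam):
        weights = [math.exp(lam * f) for f in faces]
        total = sum(weights)
        return [w / total for w in weights]

    def mean_for(lam):
        return sum(f * p for f, p in zip(faces, distribution(lam)))

    lo, hi = -20.0, 20.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean_for(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return distribution((lo + hi) / 2)


if __name__ == "__main__":
    probs = maxent_die(4.5)
    print([round(p, 4) for p in probs])
```

With a target mean of 3.5 the result is the uniform distribution; moving the mean away from 3.5 tilts the distribution as little as the constraint allows.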

The ramifications of the above are pretty wide ranging and reach into the depths of Bayesian approaches to scientific inference. Interested readers could follow up with the following;

(a) Here is an outline overview = http://cmm.cit.nih.gov/maxent/letsgo.html

(b) There is a good, but older, introductory book which is well worth reading = "Maximum Entropy in Action - A Collection of Expository Essays. Edited by Brian Buck and Vincent A. Macaulay" Oxford University Press.

(c) An essay by John Skilling (one of the key thinkers in Maximum Entropy) extending the argument and discussing "Probabilistic Data Analysis" = www.maxent.co.uk/documents.html.

(d) The outstanding personal contribution of the great (and relatively unknown) American physicist Ed Jaynes should be noted - a nice page at Washington University gives some leads (http://bayes.wustl.edu/). His life work is contained in a book called "Probability Theory: The Logic of Science" available from Amazon.

Happy reading

Matt

-- Matt R (email)

Dear E.T.

As a follow-up to my last post, your readers may be interested in this article in which Ed Jaynes tries to describe a general approach to the problem of predicting the macroscopic response of a system when the microscopic phenomena are well known. As Jaynes puts it, he tackles two questions: "Why is it that knowledge of microphenomena does not seem sufficient to understand macrophenomena?" and "Is there an extra general principle needed for this?".

http://bayes.wustl.edu/etj/articles/macroscopic.prediction.pdf

Best wishes

Matt

-- Matt R (email)

Management reasoning

Very thoughtful discussion by Eduardo Castro-Wright, vice chairman of Wal-Mart Stores, New York Times interview, May 24, 2009:

http://www.nytimes.com/2009/05/24/business/24corner.html

-- Edward Tufte

Dear ET,

Here, via the "Predictably Irrational" blog of Dan Ariely (http://www.predictablyirrational.com), is Asimov on Evidence;

Matt ----------------------------------------

"Don't you believe in flying saucers, they ask me? Don't you believe in telepathy? - in ancient astronauts? - in the Bermuda triangle? - in life after death?

No, I reply. No, no, no, no, and again no.

One person recently, goaded into desperation by the litany of unrelieved negation, burst out `Don't you believe in anything?'

`Yes,' I said. `I believe in evidence. I believe in observation, measurement, and reasoning, confirmed by independent observers. I'll believe anything, no matter how wild and ridiculous, if there is evidence for it. The wilder and more ridiculous something is, however, the firmer and more solid the evidence will have to be."

Isaac Asimov, The Roving Mind (1997), 43

-- Matt R (email)

Just found this very thought-provoking series of articles from Wired August 2008 (http://www.wired.com/science/discoveries/magazine/16-07/pb_theory). In the lead article Chris Anderson, the editor-in-chief of Wired magazine, proclaims "The End of Theory: The Data Deluge Makes the Scientific Method Obsolete". He then goes on to describe how Google-quality data analysis has made the desire to understand causation obsolete, and how correlation is good enough. This is a deeply thought-provoking stance for all active scientists and statisticians to consider.

Personally I think it's hubris. I have worked with experimental data for over 20 years and in my experience simply having more is not the issue. What the data was collected for, by whom and when and how good it is will always be critical in science. The article does however cause some concern; the current generation of undergraduates in science and statistics will have never had to suffer the privations of obtaining tricky experimental data or understand some of the pitfalls of correlation and may well fall for this kind of argument.

-- Matt R (email)

Wigmore Evidence Charts

Dear ET,

I have been reading a fascinating book by two probabilists, Joseph Kadane and David Schum, called "A probabilistic analysis of the Sacco and Vanzetti Evidence", about the famous US murder case from the 1920s.

One interesting feature of the book is their use of a particular type of graphic which they call a 'Wigmore Evidence Chart'. They also show a complete appendix of these charts summarising the Sacco and Vanzetti evidence. Wigmore was a US jurist who developed a probabilistic approach to proof (he published this approach from 1913 onwards) and is seen by the authors as the earliest exponent of what today are called 'inference networks'.

I have not seen this type of chart before and cannot remember if you mention them at all in any of your books. They are interesting but visually a bit ropey; they could really do with improvements in clarity, line weights, text integration, colours, etc.

However, they are not dead; a quick search on Google took me to this 2008 paper by Bruce Hay of Harvard Law School (http://lpr.oxfordjournals.org/cgi/content/abstract/7/3/211). The paper is from the journal "Law, Probability and Risk" and the Abstract says the following;

"Wigmore's `The Problem of Proof', published in 1913, was a path-breaking attempt to systematize the process of drawing inferences from trial evidence. In this paper, written for a conference on visual approaches to evidence, I look at the Wigmore article in relation to cubist art, which coincidentally made its American debut in New York and Chicago the same spring that the article appeared. The point of the paper is to encourage greater attention to the complex meanings embedded in visual diagrams, meanings overlooked by the prevailing cognitive scientific approaches to the Wigmore method."

I will be ordering this paper and reading around it, but in the meantime thought you or your readers may enjoy this type of chart or indeed have more knowledge about them than I do.

Best wishes

Matt R

-- Matt R (email)

fact checking

Recently I drew your attention to an article in Wired - August 2008 (http://www.wired.com/science/discoveries/magazine/16-07/pb_theory).

I mentioned that I thought it was hubris. I have just found out that this was an understatement. See this interesting article from Peter Norvig (http://norvig.com/fact-check.html) who not only explains how he had been misquoted in the Wired article, but also in passing pays homage to the legendary 'fact checking' of the New Yorker magazine.

-- Matt R (email)

Statistics Amongst the Liberal Arts

Dear ET,

I have just found a very thought provoking article from David Moore in 1998, "Statistics Amongst the Liberal Arts". His argument is that modern statistics is much less about doing the calculations and more about 'grand ideas'.

Some quotes:

"I find it hard to think of policy questions, at least in domestic policy, that have no statistical component. The reason is of course that reasoning about data, variation, and chance is a flexible and broadly applicable mode of thinking. That is just what we most often mean by a liberal art."

and

"Here is some empirical evidence that statistical reasoning is a distinct intellectual skill. Nisbett et al. (1987) gave a test of everyday, plain-language, reasoning about data and chance to a group of graduate students from several disciplines at the beginning of their studies and again after two years. Initial differences among the disciplines were small. Two years of psychology, with statistics required, increased scores by almost 70%, while studying chemistry helped not at all. Law students showed an improvement of around 10%, and medical students slightly more than 20%. The study of chemistry or law may train the mind, but does not strengthen its statistical component."

You can get the full paper here = http://www.stat.purdue.edu/~dsmoore/article.html

[ Editor: A direct link is: http://www.stat.purdue.edu/~dsmoore/articles/LibArts.pdf ]

-- Matt R (email)

From the same comic, on the proper display of large numbers ... http://xkcd.com/558/

-- Michael Round (email)

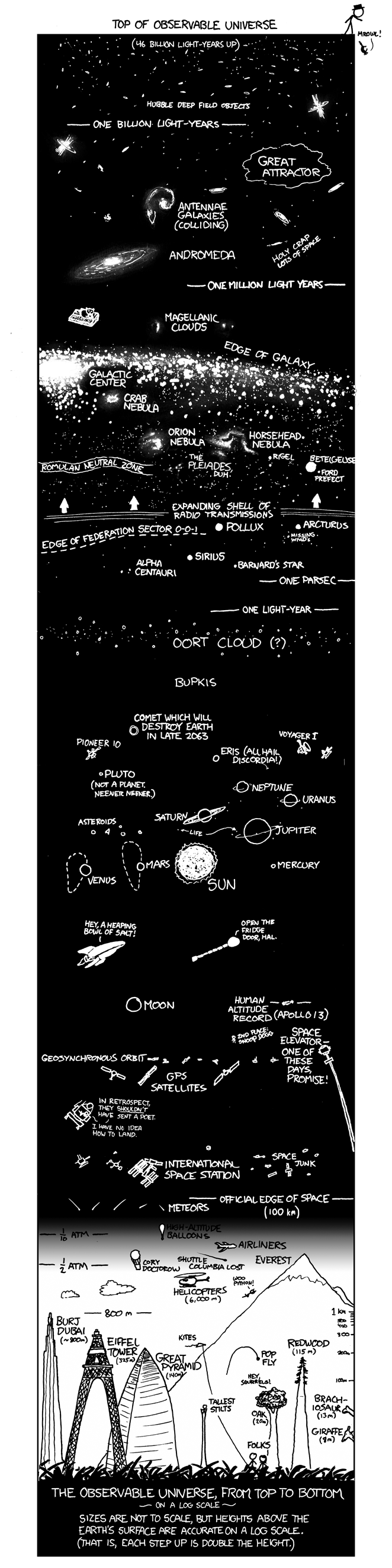

Here's a Tuftean image from XKCD: the observable universe in 2647 pixels (using log scale): http://xkcd.com/482/

-- Sam Penrose (email)

Dear ET,

Browsing my copy of Feynman's Lectures on Physics, Vol. 1 (admittedly to look at the page design), I found the following on page 1-1:

"The principle of science, the definition, almost, is the following: The test of all knowledge is experiment."

This still looks good to me after all these years.

Matt

-- Matt R (email)

The Principle of Science

It seems to me that The Deep Principle of Science is this: every empirical observation is a manifestation of the operations of Nature's universal laws. Thus the physical sciences are distinguished from the social sciences by the marvelous guarantee that whatever is observed in physical science is a product of universal laws.

And the core principle in scientific verification is this: theories are confirmed or disconfirmed by empirical observation.

In Feynman's statement, if "experiment" means what it usually means--the systematic manipulation of causes (to see their effects) in otherwise tightly controlled situations--then that would place astronomy, weather, and cosmology outside of Feynman's principle of science. In those fields, manipulations typical of experiments are generally impossible.

-- Edward Tufte

Dear ET,

I have been musing on your statement in Beautiful Evidence that "Science and art have in common intense seeing, the wide-eyed observing that generates empirical information."

In particular I have been trying to find an analytical expression of this idea of Intense Seeing.

I arrived at Intense Seeing via a particular and contingent route; from quantitative microscopy. This routing both colours my view and provides me with a chance to describe an analytical insight into the whole area. In quantitative microscopy there are a number of issues that have to be tackled to make progress, one of which is the `reducing fraction' problem. It's a really obvious problem; the higher the magnification you are using to resolve the details you are looking at, the lower the fraction of the whole object you can see in any one `field of view' (Unbiased Stereology. Howard & Reed 1998).

It can be illustrated in a number of ways but the figure shown below is a very simple two dimensional example.

Note that however attractive the Eames Powers of Ten book is, it is quite disorienting. Even increasing the linear magnification by factors of 2 leads, after a small number of iterations, to a vanishing fraction of the original area. At 8x you see 1/64th; keep going another two powers of two and you get to 32x magnification, which shows 1/1024th of the original area (in three dimensions things are obviously much worse).

The above thinking has led me to try to formulate an 'Intense Seeing Razor' similar to Ockham's Razor. The following is my current formulation:
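
[ Editor: A minimal sketch of the 'reducing fraction' arithmetic, assuming a field of view of fixed size so that the visible fraction falls as the inverse of the linear magnification raised to the number of dimensions. The function name is illustrative. ]

```python
def visible_fraction(linear_magnification, dimensions=2):
    """Fraction of the whole object seen in one field of view.

    Assumes the field of view keeps the same physical size while the
    object is enlarged by `linear_magnification` in each dimension.
    """
    return 1.0 / linear_magnification ** dimensions


if __name__ == "__main__":
    for m in (2, 4, 8, 16, 32):
        area = visible_fraction(m, dimensions=2)
        volume = visible_fraction(m, dimensions=3)
        print(f"{m:>2}x: 1/{round(1 / area)} of the area, "
              f"1/{round(1 / volume)} of the volume")
```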

Magnify your Intensity of Seeing enough to gain new insights; but no more.

Best wishes

Matt R

-- Matt R (email)

Readers of this thread will be delighted by two books by MIT academic Sanjoy Mahajan (http://mit.edu/sanjoy/www/). Mahajan is a maths & physics graduate of Cambridge University UK who has spent much of the last 10 years developing a number of 'order of magnitude' estimation approaches. These are a considerable extension of the Fermi type of problem beloved of physicists.

These books are now available as an MIT course called Art of approximation in science and engineering - here = http://mit.edu/6.055/ and a book published by MIT Press called Street Fighting Mathematics - here = http://tinyurl.com/32r3pb9.

As an example, see the Art of Approximation's use of hierarchical trees to illustrate how to break down a problem, and his analysis of how the error in a guesstimate can reduce by orders of magnitude if you use a divide-and-conquer approach. The image shows his three different estimates of the pit spacing on a CD.
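
[ Editor: A minimal sketch of one way divide-and-conquer can tighten a guesstimate. It assumes each factor has an independent plausible range and that the half-widths of those ranges add in quadrature on a log scale; that combination rule and the function names are assumptions for illustration, not a transcription of the course material. ]

```python
from math import log10, sqrt


def combine_ranges(ranges):
    """Combine plausible (low, high) ranges of independent factors.

    The log-midpoints add; the log half-widths add in quadrature, so the
    product's range is narrower than simply multiplying all the lows
    together and all the highs together.
    """
    log_mid = sum((log10(lo) + log10(hi)) / 2 for lo, hi in ranges)
    log_half = sqrt(sum(((log10(hi) - log10(lo)) / 2) ** 2 for lo, hi in ranges))
    return 10 ** (log_mid - log_half), 10 ** (log_mid + log_half)


def spread(low, high):
    """Ratio of the top of a range to the bottom."""
    return high / low


if __name__ == "__main__":
    factors = [(1, 10), (1, 10)]  # two factors, each uncertain by a factor of 10
    low, high = combine_ranges(factors)
    print(f"Combined range: {low:.2f} to {high:.2f} (spread {spread(low, high):.0f}x)")
    print("Naive range: 1 to 100 (spread 100x)")
```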

An example of his style is the following;

"This multiplication is simplified if you remember that there are only two numbers in the world: 1 and `few'. The only rule to remember is that few squared = 10, so `few' acts a lot like 3."

Best wishes

Matt

-- Matt R (email)

Dear ET,

Here is a problem that I have been aware of for a long time under the name "change of support problem" (a name from the fields of mathematical morphology and integral geometry). I recently found out that within spatial statistics and GIS it has another name: the Modifiable Areal Unit Problem (MAUP). The basic problem is that for spatial data, such as health outcomes recorded by zip code or county, socio-demographic data from Census tracts, or safety or health exposure estimates within a region around a suspected source, statistical inference changes with scale.

A classic early paper is Gehlke and Biehl (1934) who found that the magnitude of the correlation between two variables tended to increase as districts formed from Census tracts increased in size.

Waller & Gotway (2004) describe it as a "geographic manifestation of the ecological fallacy in which conclusions based on data aggregated to a particular set of districts may change if one aggregates the same underlying data to a different set of districts".

The paper by Openshaw and Taylor (1979) described how they had constructed all possible groupings of the 99 Counties in Iowa into larger districts. When considering the correlation between %Republican voters and %elderly voters, they could produce "a million or so" correlation coefficients. A set of 12 districts could be contrived to produce correlations that ranged from -0.97 to +0.99.
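
[ Editor: A minimal sketch of the scale effect on simulated data, not the Iowa data. Two variables share a weak spatial trend plus independent unit-level noise; averaging contiguous units into larger districts cancels the noise and inflates the correlation. All parameters, the seed, and the function names are illustrative assumptions, and the sketch shows only the effect of district size, not the effect of redrawing boundaries that Openshaw and Taylor explored. ]

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs)
    sy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(sx * sy)


def simulate_units(n_units=1200, seed=0):
    """Units laid out along a line, sharing a linear trend plus noise."""
    rng = random.Random(seed)
    xs, ys = [], []
    for i in range(n_units):
        trend = i / n_units                # shared large-scale structure
        xs.append(trend + rng.gauss(0, 1)) # independent unit-level noise
        ys.append(trend + rng.gauss(0, 1))
    return xs, ys


def aggregate(values, district_size):
    """Average contiguous runs of `district_size` units into districts."""
    return [
        sum(values[i:i + district_size]) / district_size
        for i in range(0, len(values), district_size)
    ]


def correlation_by_scale(xs, ys, sizes=(1, 10, 100)):
    """Correlation between the two variables at each district size."""
    return {
        size: pearson_r(aggregate(xs, size), aggregate(ys, size))
        for size in sizes
    }


if __name__ == "__main__":
    xs, ys = simulate_units()
    for size, r in correlation_by_scale(xs, ys).items():
        print(f"district size {size:>3}: r = {r:+.2f}")
```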

More here = http://www.samsi.info/200304/multi/cgcrawford.pdf

References.

Gehlke, C. E. and K. Biehl (1934). Certain effects of grouping upon the size of the correlation coefficient in census tract material. Journal of the American Statistical Association, supplement 29, 169-170.

Openshaw, S. and P. Taylor (1979). A million or so correlation coefficients. In N. Wrigley (Ed.), Statistical Methods in the Spatial Sciences, London, pp. 127-144. Pion.

Waller, L.A. and C.A. Gotway. 2004. Applied Spatial Statistics for Public Health Data. Hoboken, NJ: John Wiley & Sons.

Best wishes

Matt

-- Matt R (email)

The Principle of "limited sloppiness": If you are too sloppy, then you never get reproducible results, and then you never can draw any conclusions. But if you are just a little sloppy, then when you see something startling you....nail it down.

Max Delbruck

Cited in The FASEB Journal. 2009;23:7-9.

Discovery in the lab: Plato's paradox and Delbruck's principle of limited sloppiness.

Frederick Grinnell

-- Matt R (email)

Dear ET,

I have been trying to get the simplest and clearest statement of something I call the fundamental dilemma of magnification.

Even low power magnification can be a really useful technique for intense seeing. Magnification reveals to our naked eye new levels of detail of an object or scene. A simple example of magnification is shown in the Figure below. Here a low power magnifying glass (2 x) shows part of the surface of a page of text, at a higher magnification than we could see otherwise.

However, as a simple consequence of the design of the magnifying glass, only the region of the text that is shown in the magnifying glass is at higher magnification. The physical edge of the magnifier limits our view of the complete object. We see more clearly in the higher-magnification observation window we have onto the text, but in total we see less of the text. Once we have a situation where there is an artificial observation window, we no longer see the whole object in our field of view: we have taken a sample, and now we have to consider carefully the issues involved in geometrical sampling.

This is the fundamental dilemma of magnification.

Note that this dilemma is also the case, but perhaps less obviously, for all practical optical instruments, such as cameras, microscopes and telescopes. By design they all have to impose an artificially limited observation window on the magnified view of the object or scene.

Matt

-- Matt R (email)

Seeing as this thread has had a lot of contributions from scientists and businesspeople, I thought that readers might be interested in some ideas from the legal discipline. Here is Professor Paul Gewirtz on the importance of objective reasoning:

"Judicial power involves coercion over other people, and that coercion must be justified and have a legitimate basis. The central justification for that coercion is that it is compelled, or at least constrained, by pre-existing legal texts and legal rules, and by legal reasoning set forth in a written opinion. From this perspective, the exercise of judicial power is not legitimate if it is based on a judge's personal preferences rather than law that precedes the case, on subjective will rather than objective analysis, on emotion rather than reasoned reflection." ("On 'I Know It When I See It'", Yale Law Journal, vol 105 (1996) 1023, at p 1025).

That was a response to the reasoning of Justice Stewart of the US Supreme Court in Jacobellis v Ohio (1964) 378 US 184 at 197:

"I have reached the conclusion ... that, under the First and Fourteenth Amendments, criminal laws in this area are constitutionally limited to hard core pornography. I shall not today attempt further to define the kinds of material I understand to be embraced within that shorthand description, and perhaps I could never succeed in intelligibly doing so. But I know it when I see it, and the motion picture involved in this case is not that."

I also like this quote about experts from Chief Justice Gleeson of the High Court of Australia in HG v R (1999) 197 CLR 414 at [44]:

"[i]t is important that the opinions of expert witnesses be confined ... to opinions which are wholly or substantially based on their specialised knowledge. Experts who venture "opinions" (sometimes merely their own inference of fact), outside their field of specialised knowledge may invest those opinions with a spurious appearance of authority, and legitimate processes of fact-finding may be subverted."

-- Daniel Emkay (email)

"In business we often find that the winning system goes almost ridiculously far in maximizing and or minimizing one or a few variables -- like the discount warehouses of Costco." -- Charlie Munger*

When trying to effect a major change in a system, look for small, but significant, points of leverage rather than broad advantage. Just like power laws in science, the majority of activity often comes from the minority of effectors.

Coincidentally, the strategy of applying strength to a targeted point in a system is also a guiding principle in most Japanese martial arts.

-- Brandon Shockley (email)
